synthcity.plugins.privacy.plugin_adsgan module

- Reference: Jinsung Yoon, Lydia N. Drumright, Mihaela van der Schaar, “Anonymization through Data Synthesis using Generative Adversarial Networks (ADS-GAN): A harmonizing advancement for AI in medicine,” IEEE Journal of Biomedical and Health Informatics (JBHI), 2019.

Paper link: https://ieeexplore.ieee.org/document/9034117

- class AdsGANPlugin(n_iter: int = 10000, generator_n_layers_hidden: int = 2, generator_n_units_hidden: int = 500, generator_nonlin: str = 'relu', generator_dropout: float = 0.1, generator_opt_betas: tuple = (0.5, 0.999), discriminator_n_layers_hidden: int = 2, discriminator_n_units_hidden: int = 500, discriminator_nonlin: str = 'leaky_relu', discriminator_n_iter: int = 1, discriminator_dropout: float = 0.1, discriminator_opt_betas: tuple = (0.5, 0.999), lr: float = 0.001, weight_decay: float = 0.001, batch_size: int = 200, random_state: int = 0, clipping_value: int = 1, lambda_gradient_penalty: float = 10, lambda_identifiability_penalty: float = 0.1, encoder_max_clusters: int = 5, encoder: Any = None, dataloader_sampler: Optional[torch.utils.data.sampler.Sampler] = None, device: Any = device(type='cpu'), adjust_inference_sampling: bool = False, patience: int = 5, patience_metric: Optional[synthcity.metrics.weighted_metrics.WeightedMetrics] = None, n_iter_print: int = 50, n_iter_min: int = 100, workspace: pathlib.Path = PosixPath('workspace'), compress_dataset: bool = False, sampling_patience: int = 500, **kwargs: Any)

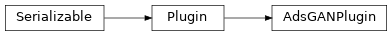

Bases: synthcity.plugins.core.plugin.Plugin

AdsGAN plugin - Anonymization through Data Synthesis using Generative Adversarial Networks.

- Parameters

generator_n_layers_hidden – int. Number of hidden layers in the generator.

generator_n_units_hidden – int. Number of hidden units in each layer of the generator.

generator_nonlin – string, default ‘relu’. Nonlinearity to use in the generator. Can be ‘elu’, ‘relu’, ‘selu’ or ‘leaky_relu’.

n_iter – int. Maximum number of generator training iterations.

generator_dropout – float. Dropout rate for the generator. If 0, dropout is not used.

discriminator_n_layers_hidden – int. Number of hidden layers in the discriminator.

discriminator_n_units_hidden – int. Number of hidden units in each layer of the discriminator.

discriminator_nonlin – string, default ‘leaky_relu’. Nonlinearity to use in the discriminator. Can be ‘elu’, ‘relu’, ‘selu’ or ‘leaky_relu’.

discriminator_n_iter – int. Maximum number of iterations in the discriminator.

discriminator_dropout – float. Dropout rate for the discriminator. If 0, dropout is not used.

lr – float. Learning rate for the optimizer.

weight_decay – float. L2 (ridge) penalty on the weights.

batch_size – int. Batch size.

random_state – int. Random seed to use.

clipping_value – int, default 1. Gradient clipping value. Zero disables the feature.

encoder_max_clusters – int. Maximum number of clusters to create for continuous columns when encoding.

adjust_inference_sampling – bool. Adjust the marginal probabilities in the synthetic data to more closely match the training set. Active only with the ConditionalSampler.

lambda_gradient_penalty – float, default 10. Weight for the gradient penalty.

lambda_identifiability_penalty – float, default 0.1. Weight for the identifiability penalty, if enabled.
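The identifiability penalty is motivated by the ADS-GAN paper's identifiability criterion: a real record counts as identifiable when some synthetic record lies closer to it than its nearest other real record. The sketch below computes the fraction of identifiable real records in plain Python. It illustrates the quantity the penalty aims to keep low and is not synthcity's training-time loss; the paper uses a feature-weighted distance, whereas unit weights are assumed here, and `identifiability_score` is a hypothetical helper.

```python
import math
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Plain Euclidean distance (unit feature weights are an assumption)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def identifiability_score(
    real: List[Sequence[float]], synthetic: List[Sequence[float]]
) -> float:
    """Fraction of real records whose nearest synthetic record is closer
    than their nearest *other* real record. Needs at least two real rows."""
    identified = 0
    for i, x in enumerate(real):
        # Distance from x to the closest other real record.
        r_real = min(euclidean(x, y) for j, y in enumerate(real) if j != i)
        # Distance from x to the closest synthetic record.
        r_syn = min(euclidean(x, s) for s in synthetic)
        if r_syn < r_real:
            identified += 1
    return identified / len(real)


real = [[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]]
near_copies = [[0.1, 0.0], [10.0, 0.1], [0.0, 9.9]]  # leaks every record
far_away = [[100.0, 100.0]]                           # leaks nothing
```

Near-copies of the training rows drive the score to 1.0, which is the behaviour a larger `lambda_identifiability_penalty` is meant to discourage.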

Early stopping arguments:

n_iter_print – int. Number of iterations after which to print updates and check the validation loss.

n_iter_min – int. Minimum number of iterations to run before early stopping can be triggered.

patience – int. Maximum number of iterations without improvement before early stopping is triggered.

patience_metric – Optional[WeightedMetrics]. If not None, this metric is used as the early stopping criterion.
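The early stopping arguments interact roughly as follows. This is a minimal sketch with assumed semantics, not synthcity's training loop: the score is evaluated every `n_iter_print` iterations, lower is better, `patience` counts consecutive evaluations without improvement, and stopping is only allowed once `n_iter_min` iterations have run. `train_with_early_stopping` and `evaluate` are hypothetical names.

```python
from typing import Callable, Optional


def train_with_early_stopping(
    n_iter: int,
    n_iter_min: int,
    n_iter_print: int,
    patience: int,
    evaluate: Callable[[int], float],
) -> int:
    """Return the number of iterations run before stopping (sketch only)."""
    best: Optional[float] = None
    checks_without_improvement = 0
    for it in range(n_iter):
        # ... one generator/discriminator update would happen here ...
        if (it + 1) % n_iter_print != 0:
            continue
        score = evaluate(it)  # lower is better (assumption)
        if best is None or score < best:
            best = score
            checks_without_improvement = 0
        else:
            checks_without_improvement += 1
        # Early stopping is only allowed after n_iter_min iterations.
        if it + 1 >= n_iter_min and checks_without_improvement >= patience:
            return it + 1
    return n_iter
```

With a validation score that never improves, `n_iter_print=50` and `patience=5`, this sketch stops after 300 iterations; with a steadily improving score it runs all `n_iter` iterations.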

Core plugin arguments:

workspace – Path. Optional path for caching intermediary results.

compress_dataset – bool, default False. Drop redundant features before training the generator.

sampling_patience – int. Maximum number of inference iterations to wait for the generated data to match the training schema.
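One plausible reading of `sampling_patience` is a bounded generate-and-filter loop: draw candidate rows, keep only those that pass validation (schema or constraint checks), and give up after a fixed number of attempts even if fewer than `count` rows were accepted. The sketch below assumes exactly that; `sample_until_valid` and the toy sampler are hypothetical and do not reproduce the plugin's internals.

```python
import random
from typing import Callable, Dict, List

Record = Dict[str, float]


def sample_until_valid(
    sample_batch: Callable[[int], List[Record]],
    is_valid: Callable[[Record], bool],
    count: int,
    sampling_patience: int,
) -> List[Record]:
    """Draw batches until `count` valid rows exist or patience runs out."""
    accepted: List[Record] = []
    for _ in range(sampling_patience):
        missing = count - len(accepted)
        if missing <= 0:
            break
        # Only ask for as many rows as are still missing.
        batch = sample_batch(missing)
        accepted.extend(row for row in batch if is_valid(row))
    return accepted[:count]


# Toy generator that sometimes produces out-of-schema (negative) ages.
rng = random.Random(0)
rows = sample_until_valid(
    lambda n: [{"age": rng.uniform(-10.0, 90.0)} for _ in range(n)],
    lambda r: r["age"] >= 0.0,
    count=10,
    sampling_patience=500,
)
```

If the generator never produces a valid row, the loop returns fewer than `count` rows (possibly none) instead of looping forever.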

Example

```python
>>> from sklearn.datasets import load_iris
>>> from synthcity.plugins import Plugins
>>>
>>> X, y = load_iris(as_frame=True, return_X_y=True)
>>> X["target"] = y
>>>
>>> plugin = Plugins().get("adsgan", n_iter=100)
>>> plugin.fit(X)
>>>
>>> plugin.generate(50)
```

- fit(X: Union[synthcity.plugins.core.dataloader.DataLoader, pandas.core.frame.DataFrame], *args: Any, **kwargs: Any) → Any

Training method for the synthetic data plugin.

- Parameters

X – DataLoader or pd.DataFrame. The reference dataset.

cond – Optional, Union[pd.DataFrame, pd.Series, np.ndarray]. Optional training conditional. The training conditional can be used to control the output of some models, like GANs or VAEs. The content can be anything, as long as it maps to the training dataset X. Usage example:

```python
>>> import numpy as np
>>> from sklearn.datasets import load_iris
>>> from synthcity.plugins.core.dataloader import GenericDataLoader
>>> from synthcity.plugins.core.constraints import Constraints
>>>
>>> # Load in `test_plugin` the generative model of choice
>>> # ....
>>>
>>> X, y = load_iris(as_frame=True, return_X_y=True)
>>> X["target"] = y
>>>
>>> X = GenericDataLoader(X)
>>> test_plugin.fit(X, cond=y)
>>>
>>> count = 10
>>> X_gen = test_plugin.generate(count, cond=np.ones(count))
>>>
>>> # The conditional only optimizes the output generation
>>> # for GANs and VAEs, but does NOT guarantee the samples
>>> # are only from that condition.
>>> # If you want to guarantee that output contains only
>>> # "target" == 1 samples, use Constraints.
>>>
>>> constraints = Constraints(
...     rules=[
...         ("target", "==", 1),
...     ]
... )
>>> X_gen = test_plugin.generate(
...     count,
...     cond=np.ones(count),
...     constraints=constraints,
... )
>>> assert (X_gen["target"] == 1).all()
```

- Returns

self

- classmethod fqdn() → str

The fully qualified name of the plugin.

- generate(count: Optional[int] = None, constraints: Optional[synthcity.plugins.core.constraints.Constraints] = None, random_state: Optional[int] = None, **kwargs: Any) → synthcity.plugins.core.dataloader.DataLoader

Synthetic data generation method.

- Parameters

count – optional int. The number of samples to generate. If None, len(reference_dataset) samples are generated.

cond – Optional, Union[pd.DataFrame, pd.Series, np.ndarray]. Optional generation conditional. The conditional can be used only if the model was also trained with a conditional. If provided, it must have length count. Not all models support conditionals. Conditionals can be used in VAEs or GANs to speed up generation under some constraints. For model-agnostic solutions, see the constraints parameter.

constraints – optional Constraints. Optional constraints to apply to the generated data. If None, the reference schema constraints are applied. The constraints are model agnostic and filter the output of the generative model. The constraints are a list of rules. Each rule is a tuple of the form (<feature>, <operation>, <value>).

- Valid Operations:

"<", "lt": less than <value>

"<=", "le": less than or equal to <value>

">", "gt": greater than <value>

">=", "ge": greater than or equal to <value>

"==", "eq": equal to <value>

"in": valid for categorical features; <value> must be an array. For example, ("target", "in", [0, 1])

"dtype": <value> is a data type. For example, ("target", "dtype", "int")

- Usage example:

```python
>>> from synthcity.plugins.core.constraints import Constraints
>>> constraints = Constraints(
...     rules=[
...         ("InterestingFeature", "==", 0),
...     ]
... )
>>>
>>> syn_data = syn_model.generate(
...     count=count,
...     constraints=constraints,
... ).dataframe()
>>>
>>> assert (syn_data["InterestingFeature"] == 0).all()
```

random_state – optional int. Optional random seed to use.
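The rule semantics listed under `constraints` can be sketched as a plain-Python filter over rows represented as dictionaries. This is an assumption-laden illustration, not the `Constraints` implementation: `satisfies` and `filter_rows` are hypothetical, and the `dtype` check here is a simplification that only compares Python type names.

```python
import operator
from typing import Any, Callable, Dict, List, Tuple

Rule = Tuple[str, str, Any]  # (<feature>, <operation>, <value>)

# Operation names follow the list of valid operations; the mapping is a sketch.
_OPS: Dict[str, Callable[[Any, Any], bool]] = {
    "<": operator.lt, "lt": operator.lt,
    "<=": operator.le, "le": operator.le,
    ">": operator.gt, "gt": operator.gt,
    ">=": operator.ge, "ge": operator.ge,
    "==": operator.eq, "eq": operator.eq,
    "in": lambda value, allowed: value in allowed,
    # Simplified dtype check: compares the Python type name, e.g. "int".
    "dtype": lambda value, dtype: type(value).__name__ == dtype,
}


def satisfies(row: Dict[str, Any], rules: List[Rule]) -> bool:
    """True if the row passes every (feature, operation, value) rule."""
    return all(_OPS[op](row[feature], target) for feature, op, target in rules)


def filter_rows(rows: List[Dict[str, Any]], rules: List[Rule]) -> List[Dict[str, Any]]:
    """Drop rows that violate any rule, like a model-agnostic output filter."""
    return [row for row in rows if satisfies(row, rules)]


rows = [
    {"target": 0, "age": 30},
    {"target": 1, "age": 17},
    {"target": 2, "age": 45},
]
kept = filter_rows(rows, [("target", "in", [0, 1]), ("age", ">=", 18)])
```

Because filtering only removes rows, a strict rule set can leave fewer than `count` samples, which is why generation may need to sample repeatedly.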

- Returns

<count> synthetic samples

- static hyperparameter_space(**kwargs: Any) → List[synthcity.plugins.core.distribution.Distribution]

Returns the hyperparameter space for the derived plugin.

- static load(buff: bytes) → Any

- static load_dict(representation: dict) → Any

- static name() → str

The name of the plugin.

- plot(plt: Any, X: synthcity.plugins.core.dataloader.DataLoader, count: Optional[int] = None, plots: list = ['marginal', 'associations', 'tsne'], **kwargs: Any) → Any

Plot the real-synthetic distributions.

- Parameters

plt – The plotting backend to draw on (e.g. matplotlib.pyplot).

X – DataLoader. The reference dataset.

- Returns

self

- classmethod sample_hyperparameters(*args: Any, **kwargs: Any) → Dict[str, Any]

Sample values from the hyperparameter space for the current plugin.
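Conceptually, `sample_hyperparameters` draws one value per entry of the space returned by `hyperparameter_space`. The sketch below shows that idea as uniform random search over a hypothetical space; `IntRange`, `Choice` and this standalone `sample_hyperparameters` function are stand-ins invented for the example (synthcity uses its own `Distribution` objects), and the ranges are illustrative rather than the plugin's actual search space.

```python
import random
from dataclasses import dataclass
from typing import Any, Dict, Sequence, Union


@dataclass
class IntRange:
    low: int
    high: int  # inclusive upper bound


@dataclass
class Choice:
    options: Sequence[Any]


Space = Dict[str, Union[IntRange, Choice]]


def sample_hyperparameters(space: Space, rng: random.Random) -> Dict[str, Any]:
    """Draw one value per hyperparameter (uniform random search)."""
    sample: Dict[str, Any] = {}
    for name, dist in space.items():
        if isinstance(dist, IntRange):
            sample[name] = rng.randint(dist.low, dist.high)
        else:
            sample[name] = rng.choice(list(dist.options))
    return sample


# Parameter names come from AdsGANPlugin; the ranges are hypothetical.
SPACE: Space = {
    "generator_n_layers_hidden": IntRange(1, 4),
    "generator_nonlin": Choice(["relu", "leaky_relu", "elu", "selu"]),
    "batch_size": Choice([100, 200, 500]),
}
params = sample_hyperparameters(SPACE, random.Random(0))
```

A dictionary like `params` has the shape that can be passed as keyword arguments when constructing a plugin.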

- classmethod sample_hyperparameters_optuna(trial: Any, *args: Any, **kwargs: Any) → Dict[str, Any]

- save() → bytes

- save_dict() → dict

- save_to_file(path: pathlib.Path) → bytes

- schema() → synthcity.plugins.core.schema.Schema

The reference schema

- schema_includes(other: Union[synthcity.plugins.core.dataloader.DataLoader, pandas.core.frame.DataFrame]) → bool

Helper method to test whether the reference schema includes a dataset.

- Parameters

other – DataLoader or pd.DataFrame. The dataset to test.

- Returns

bool. Whether the schema includes the dataset.
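As an illustration of what a schema inclusion check can look like, the sketch below records a numeric range or a category set per feature and tests rows against it. The schema representation and this `schema_includes` function are hypothetical simplifications, not the actual `Schema` class, and requiring an exact match between the row's features and the schema's features is an assumption.

```python
from typing import Any, Dict, List, Set, Tuple, Union

NumericSpec = Tuple[float, float]  # inclusive (min, max) seen in training
CategoricalSpec = Set[Any]         # categories seen in training
SchemaSketch = Dict[str, Union[NumericSpec, CategoricalSpec]]


def schema_includes(schema: SchemaSketch, rows: List[Dict[str, Any]]) -> bool:
    """True if every row has the schema's features and each value lies
    inside the recorded range or category set."""
    for row in rows:
        if set(row) != set(schema):
            return False
        for feature, spec in schema.items():
            value = row[feature]
            if isinstance(spec, tuple):
                low, high = spec
                if not low <= value <= high:
                    return False
            elif value not in spec:
                return False
    return True


schema = {"age": (0.0, 100.0), "sex": {"F", "M"}}
```

Under this sketch, a row is rejected for a missing feature, an out-of-range number, or an unseen category.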

- training_schema() → synthcity.plugins.core.schema.Schema

The internal schema.

- static type() → str

The type of the plugin.

- static version() → str

API version

- plugin

alias of synthcity.plugins.privacy.plugin_adsgan.AdsGANPlugin